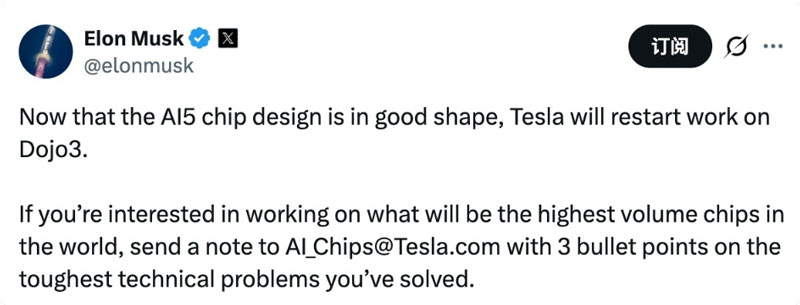

On January 19, Tesla CEO Elon Musk announced that, with the design of the next-generation AI5 chip largely complete and entering a stable phase, Tesla will officially restart the Dojo 3 supercomputer project, which had previously been put on hold. The decision is widely seen as a critical return to Tesla’s path toward self-developed AI computing power.

According to Tesla, Dojo 3, also referred to by Musk as the “AI7 form factor,” will be built on the AI5 and AI6 chip architectures, with a core focus on large-scale artificial intelligence training. The system is intended to provide high-performance, low-latency computing power for Tesla’s Full Self-Driving (FSD) system and the Optimus humanoid robot, thereby accelerating the iteration of perception, decision-making, and control models.

Musk also revealed that Tesla is significantly accelerating the pace of AI chip development, with a goal of completing a major chip design iteration every nine months. In his vision, Tesla aims not only to upgrade its chips continuously and rapidly, but also to build the world’s largest AI chip production ecosystem over the medium to long term. Musk expects the total volume of Tesla’s AI chips to far exceed the combined output of all competing products, providing unprecedented computing power for AI training and inference.

To ensure rapid progress, Musk has put out a public call on X for top-tier chip design talent, stating that Tesla is seeking “world-class engineers.” He invited interested candidates to submit their resumes, along with a brief summary of “the three hardest technical problems they have solved,” to a designated recruitment email address, using the summary as a benchmark to identify engineers capable of major technical breakthroughs.

Industry analysts believe that the restart of the Dojo 3 project not only signals that Tesla is once again accelerating its work on self-developed AI chips and supercomputing systems, but also reflects a long-term strategic intent to reduce reliance on external computing resources and general-purpose GPUs. As demand for computing power from FSD and humanoid robots continues to rise, this strategy is expected to further strengthen Tesla’s technological moat in autonomous driving and intelligent robotics, and to have a significant influence on the broader AI computing landscape.