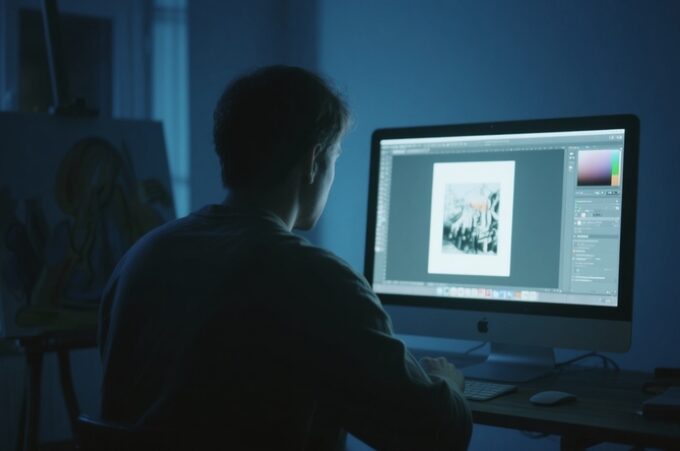

Amid the rapid advancement of artificial intelligence, a global army of “invisible laborers” stands behind the sanitized world that social media presents. Confined to cramped, dimly lit workspaces, they confront extreme content, including violence, hate speech, and child sexual abuse material, day in and day out, sacrificing their mental health and personal happiness to fuel the prosperity of the AI industry. The “algorithms safeguarding digital security” touted by tech giants are nothing but a beautiful lie; it is countless ordinary workers, burdened with profound physical and psychological trauma, who truly uphold the content moderation system.

Blood and Tears: The Stories of AI Laborers

Abrha, a refugee from Tigray, fled his war-torn hometown only to be unexpectedly recruited by Sama as a content moderator. His job? Reviewing content related to the very conflict that had destroyed his family. Every day, he was bombarded with hate speech targeting his people and footage of bombings—at one point even witnessing the body of his cousin. Tormented by inner agony, he picked up a heavy smoking habit, a futile attempt to alleviate his unbearable pain. When his contract ended, unemployed and adrift, he had no choice but to return to his volatile homeland, striving to rebuild a shattered life.

Kings, raised in a slum, once saw content moderation as a ticket to a better future. Yet after being exposed to child sexual abuse material (CSAM), he was plagued by relentless nightmares. The horrific images haunted him day and night, driving a wedge between him and his wife and eventually leading to their divorce. He was left psychologically and financially ruined, and his once-promising dreams vanished entirely.

Ranta, a South African mother, traveled to Nairobi hoping to secure a better life for her children, only to see Sama’s promises broken. The company deducted premiums for medical insurance it never provided, denied her the prescribed maternity leave, and refused to transfer her to a less traumatic role even after her sister’s death. Long-term exposure to content involving gender-based violence, racism, and other atrocities eroded her trust in humanity, leaving her trapped in deep self-doubt.

These stories are not isolated. From Kenya to Germany, from Syrian refugee communities to Colombian slums, content moderators worldwide endure similar trauma and exploitation. They form the most hidden link in the AI industry chain, yet their struggles remain largely unseen, even as the AI industry continues to expand without accountability.

Systemic Exploitation and the Roots of Trauma

The suffering of content moderators stems from both the direct trauma of their work and systemic flaws in how that work is organized. Forced daily to confront the darkest aspects of human nature, they commonly suffer from PTSD, nightmares, anxiety, and other conditions, with their worldviews and relationships irrevocably altered. Compounding their agony are precarious employment, token mental health support, harsh performance metrics, and pervasive surveillance.

Workers are required to review hundreds of pieces of content per hour, leaving no time to process distressing emotions. The so-called psychological counseling lacks targeted support for their unique trauma, failing to address deep-seated mental scars. Their every move—including bathroom breaks—is monitored, and tech companies exploit the vulnerability of refugees and immigrants, silencing them with non-disclosure agreements to perpetuate exploitation in the shadows.

Thankfully, laborers are not surrendering. The establishment of the African Content Moderators Union and a Kenyan court ruling holding Meta liable for the harsh working conditions of its contractors mark significant steps toward justice. However, meaningful change demands industry-wide mental health frameworks, “trauma exposure limits,” independent regulatory bodies, and greater public awareness of the human cost behind AI. Only by compelling tech giants to shoulder social responsibility can we foster ethical progress.

AI advancement should never come at the expense of human dignity. As we enjoy a sanitized digital space, we must not forget the laborers enduring darkness behind the screens. By acknowledging this heavy cost, improving systems, and collaborating globally to protect workers’ rights, we can build a future where technological progress aligns with ethics and fairness—a future where humanity is not sacrificed at the altar of innovation.