Recently, DeepSeek officially released its multimodal visual understanding model DeepSeek-OCR 2. According to the latest news, the model is built on the new DeepEncoder V2 architecture and introduces significant improvements in image understanding, enabling artificial intelligence systems to analyze complex visual information in a way that more closely resembles human reasoning.

Unlike traditional OCR systems that rely on fixed recognition sequences, DeepSeek-OCR 2 no longer treats images as simple collections of pixels. Instead, it adopts a semantics-driven dynamic reorganization mechanism that adjusts recognition order and focus based on content meaning. This approach allows the model to maintain strong recognition stability and accuracy in challenging scenarios such as complex layouts, image distortion, overlapping elements, and unconventional document formats.

On the authoritative benchmark OmniDocBench v1.5, DeepSeek-OCR 2 achieved an overall score of 91.09%, representing an improvement of 3.73 percentage points over the previous generation. At the same time, the model has been systematically optimized for computational efficiency, with the number of visual tokens controlled between 256 and 1120, comparable to leading multimodal models in the industry. In practical engineering tests, repetition rates when processing online user logs and PDF training data were reduced by 2.08% and 0.81%, respectively, demonstrating a high level of engineering maturity.

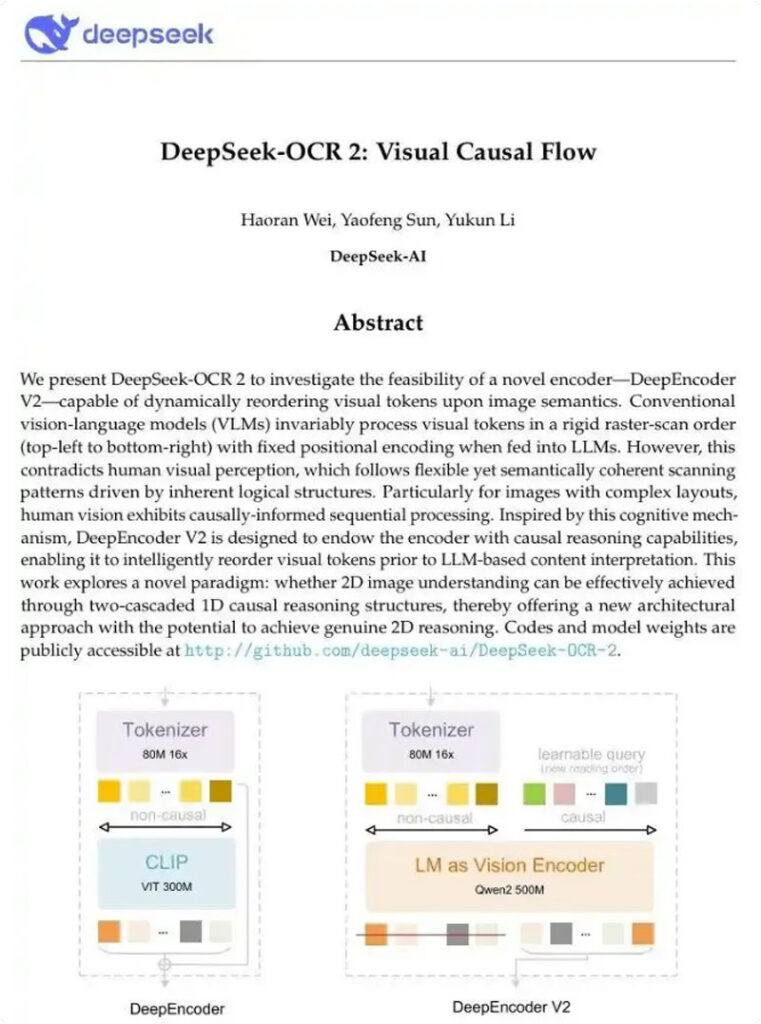

These performance gains stem from DeepSeek’s continued exploration at the AI architecture and foundation model level. DeepEncoder V2 is the first to validate the feasibility of using a language model architecture as a visual encoder, allowing the system to directly leverage established advances from large language models (LLMs), including mixture-of-experts (MoE) designs and efficient attention mechanisms. The research team notes that this design path opens new possibilities for unified multimodal AI encoding, where a single parameter framework could support coordinated encoding and compression of images, audio, and text through modality-specific learnable queries.

At the structural level, DeepSeek-OCR 2 introduces a dual-cascaded one-dimensional causal reasoning architecture, decomposing two-dimensional visual understanding into two complementary subsystems: reading logic reasoning and visual task reasoning. This division provides a clearer reasoning pathway for complex document understanding and offers a new architectural reference for enabling higher-level two-dimensional reasoning in machines.

As its capabilities continue to improve, DeepSeek-OCR 2 has demonstrated application potential across a range of real-world scenarios, including automated financial document processing, structured medical record entry, digital restoration of historical texts, and intelligent management of government archives. Its significance lies not only in improved recognition accuracy, but also in establishing a visual understanding approach that more closely aligns with human reading habits, laying the groundwork for deeper machine understanding of real-world information.