The global software industry faces a paradox: adoption of AI programming tools has reached an all-time high, yet software release volume has shown no explosive growth. This contradiction prompted deep skepticism in a veteran programmer with 25 years of experience who, after six weeks of empirical research, says he has uncovered the harsh truth behind the myth of AI-driven efficiency gains.

This developer, who goes by the pseudonym “Code Veteran,” began his career in the era of amber monochrome screens. After seeing METR research suggesting that developers using AI tools may actually work 19% slower, he decided to verify the real impact of AI tools himself. He ran a controlled experiment, tossing a coin to decide whether each task would be done with or without AI. The six weeks of data he collected had not yet reached statistical significance, but they pointed the opposite way from the marketing: AI slowed him down by a median of 21%. “This aligns perfectly with METR’s findings. The supposed efficiency gains simply don’t exist,” he emphasized.
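A coin-toss design like the one described can be analyzed in a few lines. The sketch below is an illustration under stated assumptions, not the experimenter's actual code: the function names (`assign_conditions`, `median_slowdown`, `permutation_p_value`) are hypothetical, the timings in the demo are invented, and the permutation test on medians is one reasonable choice of significance test, not necessarily the one he used.

```python
import random
import statistics


def assign_conditions(task_ids, seed=None):
    """Flip a fair coin per task: 'ai' means use AI tools, 'manual' means don't."""
    rng = random.Random(seed)
    return {task: ("ai" if rng.random() < 0.5 else "manual") for task in task_ids}


def median_slowdown(ai_minutes, manual_minutes):
    """Relative change in median completion time; positive means AI tasks took longer."""
    return statistics.median(ai_minutes) / statistics.median(manual_minutes) - 1.0


def permutation_p_value(ai_minutes, manual_minutes, n_iter=10_000, seed=0):
    """Two-sided permutation test on the difference between group medians.

    Shuffles the pooled timings many times and counts how often a random
    split produces a median gap at least as large as the observed one.
    """
    rng = random.Random(seed)
    observed = abs(statistics.median(ai_minutes) - statistics.median(manual_minutes))
    pooled = list(ai_minutes) + list(manual_minutes)
    n_ai = len(ai_minutes)
    extreme = 0
    for _ in range(n_iter):
        rng.shuffle(pooled)
        gap = abs(statistics.median(pooled[:n_ai]) - statistics.median(pooled[n_ai:]))
        if gap >= observed:
            extreme += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (extreme + 1) / (n_iter + 1)


if __name__ == "__main__":
    # Hypothetical task timings in minutes, for illustration only.
    ai = [50, 70, 65, 90, 40, 80]
    manual = [60, 45, 55, 70, 50, 66]
    print(f"median slowdown: {median_slowdown(ai, manual):+.1%}")
    print(f"permutation p-value: {permutation_p_value(ai, manual):.3f}")
```

With only a handful of tasks per arm, even a sizeable gap in medians can fail to reach significance, which is consistent with how the experimenter describes his six-week result.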

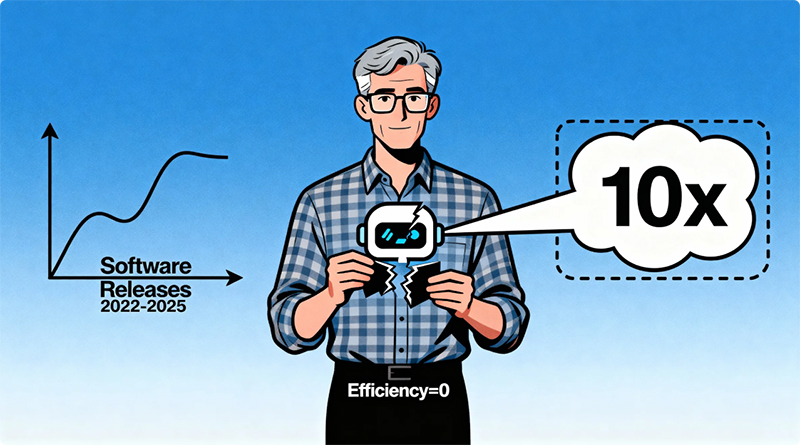

The tech market is saturated with exaggerated claims: Cursor touts “Exceptional Productivity,” GitHub Copilot promises you can “Delegate Tasks Like a Boss,” and Google claims its model increases development speed by 25%. Real-world data paints a starkly different picture. Statista’s chart of global software release volume shows no “hockey stick” growth curve after AI tools became widespread in 2022; the trend stays flat. Data on new game releases from Steam, domain registrations from Verisign, and open-source project activity from GH Archive likewise show no surge in output attributable to AI.

According to recent reports, this disconnect is having serious consequences. Multiple developers report being laid off for not adopting AI tools quickly enough, or feeling forced to stay in unsatisfying jobs because of market pressure. A vicious cycle is forming within the industry: tech leaders, driven by FOMO (Fear Of Missing Out), pivot their companies to “AI-first,” use fictional productivity narratives to justify layoffs, and thereby suppress developer salaries. More absurdly, 14% of developers claim to have become “10x engineers,” yet global software output shows no corresponding growth.

The experimenter also spent $70 running BigQuery queries over GitHub data and found no significant change in commit activity after AI tools proliferated. SteamDB’s game release charts are similarly unremarkable, showing no boom in indie releases after 2023. This data directly challenges the core selling point of AI tools: if they truly boosted efficiency, the software market should be flooded with new products.
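The “hockey stick” test applied to release, commit, and game data comes down to asking whether annual growth after AI tools spread is clearly faster than growth before. The sketch below makes that comparison explicit using compound annual growth rates. It is a hypothetical illustration, not the experimenter's method: the function names, the 2022 breakpoint parameter, the ten-percentage-point threshold, and the yearly counts in the demo are all assumptions, not real Statista, SteamDB, or GitHub figures.

```python
def cagr(start, end, years):
    """Compound annual growth rate between two yearly counts."""
    return (end / start) ** (1.0 / years) - 1.0


def growth_before_after(counts, breakpoint):
    """Return (CAGR up to the breakpoint year, CAGR from the breakpoint year onward).

    `counts` maps year -> output count (releases, commits, games, ...).
    """
    years = sorted(counts)
    first, last = years[0], years[-1]
    if breakpoint not in counts or not (first < breakpoint < last):
        raise ValueError("breakpoint must be an interior year present in counts")
    before = cagr(counts[first], counts[breakpoint], breakpoint - first)
    after = cagr(counts[breakpoint], counts[last], last - breakpoint)
    return before, after


def looks_like_hockey_stick(counts, breakpoint, min_jump=0.10):
    """True if growth after the breakpoint beats growth before it by at least
    `min_jump` (0.10 = ten percentage points per year; an arbitrary threshold)."""
    before, after = growth_before_after(counts, breakpoint)
    return after - before >= min_jump


if __name__ == "__main__":
    # Hypothetical yearly counts, for illustration only.
    steady = {2019: 100.0, 2020: 110.0, 2021: 121.0, 2022: 133.1, 2023: 146.41, 2024: 161.051}
    print(looks_like_hockey_stick(steady, 2022))  # False: growth stays at ~10% per year
```

A flat or steadily rising series fails this check, while a series that doubles each year after the breakpoint passes it; the article's argument is that the real-world charts look like the former.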

Facing industry pressure, the experimenter advises developers to stay rational: “If AI tools feel clunky to you, the data supports your intuition. Stick with what works; you are not falling behind.” He adds that anyone claiming to be a “10x developer” thanks to AI should be asked for concrete results: “If you can’t point to 30 applications built this year, stop the empty talk.”

The experimenter also systematically refutes common excuses. To the claim that “prompt engineering takes time,” he cites GitHub Copilot data: the acceptance rate for code suggestions rose only from 29% initially to 34% after six months. To the argument that “quality improved, but speed remained the same,” he counters that industry code quality has actually regressed by a decade: “hardly anyone writes tests anymore.”

This empirical research reveals not just a tech bubble but a widespread cognitive bias across the industry. While tech leaders reshape companies around fictional productivity narratives, working developers pay the price for ineffective tools. The experimenter closes with a warning: “It’s 2025. These tools have been around for years and are still terrible. Unless objective evidence of mass software delivery emerges, all claims about AI efficiency gains should be considered lies.”