On September 3rd, a piece of news sent shockwaves through the tech industry: Google has begun selling its TPUs to external parties.

Reports indicate that Google has recently been approaching small cloud service providers that primarily lease NVIDIA chips, urging them to also host Google’s own AI processors, TPUs, in their data centers. Google has already reached an agreement with at least one such provider, London-based Fluidstack, to deploy its TPUs in a data center in New York.

Google’s efforts do not stop there. According to reports, the company is also pursuing similar deals with other NVIDIA-centered providers, including Crusoe (which is building data centers for OpenAI) and CoreWeave, a “close partner” of NVIDIA that leases chips to Microsoft and has a supply contract with OpenAI.

On September 9th, Citigroup analysts lowered their price target for NVIDIA to $200, citing intensifying competition from TPUs and projecting that NVIDIA’s GPU sales in 2026 would be approximately $12 billion lower than previously forecast. The battle between Google and NVIDIA has clearly begun, and the prize is the trillion-dollar AI computing market.

However, Google’s preparation for this battle has been longer than many might imagine.

TPU: The Optimal Solution for AI Computing?

As early as 2006, Google internally discussed the possibility of deploying GPUs, FPGAs, or ASICs in its in-house data centers. At the time, though, only a handful of applications could run on such specialized hardware, and the excess computing power in Google’s large-scale data centers was more than sufficient to meet demand. As a result, plans to deploy specialized hardware were put on hold.

By 2013, however, Google researchers made a critical projection: if users spent just 3 minutes a day on voice search, with speech recognition running on deep neural networks, Google’s existing data centers would need double their computing power to keep up with demand.

Meeting this demand by expanding data centers alone would have been both slow and costly. Against this backdrop, Google began designing the TPU, an ASIC built specifically for artificial intelligence computing, with two core goals: exceptional matrix multiplication throughput and outstanding energy efficiency.

To achieve high throughput, the TPU adopts a “Systolic Array” architecture at the hardware level. This architecture consists of a grid of numerous simple processing elements (PEs). Data flows into the array from the edges and moves synchronously, step by step, through adjacent processing units with each clock cycle. Each unit performs a single multiply-accumulate operation and passes the intermediate result directly to the next unit. This design enables high reuse of data within the array, minimizing access to high-latency, high-power main memory and achieving remarkable processing speeds.
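The data flow described above can be sketched as a small cycle-by-cycle simulation. This is a toy model under stated assumptions, not Google's hardware design: it assumes a weight-stationary layout in which each PE holds one weight, activations move one PE to the right per cycle, and partial sums move one PE down per cycle. The function name `systolic_matmul` and the register names are hypothetical.

```python
def systolic_matmul(A, W):
    """Multiply A (M x K) by W (K x N) on a simulated K x N grid of PEs.

    Assumed layout: PE(k, j) permanently holds W[k][j]. Activations enter
    from the left edge and move right; partial sums move downward.
    """
    M, K, N = len(A), len(W), len(W[0])
    a_reg = [[0] * N for _ in range(K)]  # activation held by each PE
    p_reg = [[0] * N for _ in range(K)]  # partial sum produced by each PE
    C = [[0] * N for _ in range(M)]
    cycles = M + K + N - 2  # cycles until the last result leaves the array

    for t in range(cycles):
        new_a = [[0] * N for _ in range(K)]
        new_p = [[0] * N for _ in range(K)]
        for k in range(K):
            for j in range(N):
                if j == 0:
                    # Left edge: row m of A enters PE row k at cycle m + k.
                    m = t - k
                    a_in = A[m][k] if 0 <= m < M else 0
                else:
                    # Interior: take the value the left neighbour held last cycle.
                    a_in = a_reg[k][j - 1]
                # Partial sum comes from the PE above (zero at the top edge).
                p_in = p_reg[k - 1][j] if k > 0 else 0
                new_a[k][j] = a_in
                new_p[k][j] = p_in + a_in * W[k][j]  # one multiply-accumulate
        a_reg, p_reg = new_a, new_p

        # The bottom row emits finished sums: C[m][j] appears at cycle m + K - 1 + j.
        for j in range(N):
            m = t - (K - 1) - j
            if 0 <= m < M:
                C[m][j] = p_reg[K - 1][j]
    return C, cycles
```

In this sketch each element of A is read from the outside once and then reused by every PE in its row, which is the data reuse the paragraph refers to. A 2 x 2 by 2 x 2 product finishes in 4 simulated cycles.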

The secret to its outstanding energy efficiency lies in a hardware-software co-design strategy built on ahead-of-time compilation. Traditional general-purpose chips require energy-intensive caches to handle diverse and unpredictable data access. The TPU, by contrast, has its compiler fully map out all data paths before a program runs. This determinism eliminates the need for complex caching mechanisms, drastically reducing energy consumption. In TPU design, Google leads in defining the overall architecture and functionality, while Broadcom participates in the back-end design of some chips. Currently, Google’s TPUs are mainly manufactured by TSMC.
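The compile-time planning idea can be illustrated with a toy scheduler. This sketch is an assumption-laden illustration, not the TPU compiler: the function names `compile_schedule` and `run`, the two-slot scratchpad, and the instruction tuples are all hypothetical. The point is only that every load and its destination slot are fixed before execution, so the "runtime" never has to look anything up or handle a miss.

```python
def compile_schedule(num_tiles, num_slots=2):
    """Plan every load and compute step, with its scratchpad slot, before running."""
    if num_tiles == 0:
        return []
    schedule = [("load", 0, 0)]
    for tile in range(num_tiles):
        nxt = tile + 1
        if nxt < num_tiles:
            # Prefetch the next tile into another slot while this one is in use.
            schedule.append(("load", nxt, nxt % num_slots))
        schedule.append(("compute", tile, tile % num_slots))
    return schedule


def run(schedule, tiles, weights):
    """Execute the fixed plan; slots are addressed directly, with no cache lookup."""
    scratchpad = {}
    acc = 0
    for op, tile, slot in schedule:
        if op == "load":
            scratchpad[slot] = tiles[tile]
        else:
            # Dot product of the tile in its planned slot with that tile's weights.
            acc += sum(x * w for x, w in zip(scratchpad[slot], weights[tile]))
    return acc
```

Because the schedule is an ordinary list produced ahead of time, it can be inspected or checked before anything runs, which is the kind of determinism the paragraph credits for removing cache hardware.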

As large language models (LLMs) grow dramatically in parameter count and move into widespread deployment, the bulk of AI computing is shifting from “training” to “inference.” At this stage, GPUs, which are general-purpose computing units, are beginning to show the drawbacks of high cost and excessive power consumption. The TPU, designed specifically for AI computing from the start, offers a significant cost-performance advantage. Reports suggest that Google’s computing cost on TPUs is only about one-fifth of what OpenAI pays for GPU computing, and that the TPU’s performance per watt exceeds that of contemporary GPUs.

To seize the market, Google has built a full range of products and an ecosystem around its TPU architecture.

Google’s Decade of Chip Development

Google’s first-generation TPU (v1), launched in 2015, achieved higher energy efficiency than CPUs and GPUs of the same period through a highly simplified, specialized design. It demonstrated its high performance in projects like AlphaGo, validating the technical path of AI ASICs. As research advanced, computing bottlenecks in the training phase became increasingly prominent, prompting TPU design to shift toward system-level solutions.

The TPU v2, released in 2017, introduced the BF16 data format to support model training and was equipped with high-bandwidth memory (HBM). More crucially, the v2 interconnected 256 chip units via a custom high-speed network, forming the first TPU Pod system. The subsequent TPU v3 achieved significant performance improvements by increasing the number of computing units and introducing large-scale liquid cooling technology.
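For readers unfamiliar with BF16 (bfloat16), the format keeps the sign bit and all 8 exponent bits of a 32-bit float but only the top 7 mantissa bits, so it covers the same numeric range as FP32 with less precision. The following is a minimal sketch of that conversion using round-to-nearest-even; the function names are hypothetical, and NaN handling is deliberately left out.

```python
import struct


def float32_to_bf16_bits(x):
    """Return the 16-bit bfloat16 pattern for x (NaN inputs are not handled)."""
    bits = struct.unpack(">I", struct.pack(">f", x))[0]  # raw float32 bits
    # Round to nearest, ties to even, on the 16 bits about to be dropped.
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding_bias) >> 16) & 0xFFFF


def bf16_bits_to_float(b):
    """Expand a bfloat16 bit pattern back to a Python float."""
    return struct.unpack(">f", struct.pack(">I", b << 16))[0]
```

With this sketch, 1.0 round-trips exactly, pi becomes 3.140625, and a value as large as 1e38 stays finite. FP16, by comparison, cannot represent values above 65504.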

The launch of the TPU v4 brought a major innovation in interconnection technology: it adopted Optical Circuit Switching (OCS) to enable dynamic reconfiguration of the network topology within the TPU Pod, thereby enhancing fault tolerance and execution efficiency for large-scale training tasks.

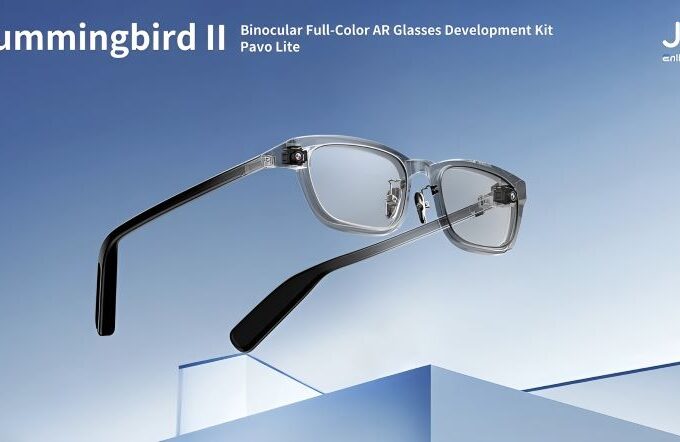

With the TPU v5 and v6 generations (the latter codenamed “Trillium”), Google adopted a differentiated product strategy, creating two series: the “p” series, which focuses on extreme performance, and the “e” series, which prioritizes energy efficiency. This allows TPUs to adapt to diverse AI application scenarios.

At this year’s Google Cloud Next conference, Google unveiled its 7th-generation TPU, codenamed “Ironwood.” Ironwood is Google’s most powerful, energy-efficient, and eco-friendly TPU to date, with a peak computing power of 4,614 TFLOPs per chip, 192 GB of high-bandwidth memory, and memory bandwidth of up to 7.2 TB/s. Its peak computing power per watt reaches 29.3 TFLOPs. Ironwood is also the first TPU to support the FP8 computing format, implemented in its tensor cores and matrix math units, which makes it more efficient at large-scale inference tasks. In fact, the overall performance of Ironwood is already very close to NVIDIA’s B200, and even surpasses it in some aspects.
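A rough back-of-the-envelope calculation shows what these figures imply for inference. The sketch below rests on assumptions: it treats the bandwidth figure as 7.2 terabytes per second of memory bandwidth, uses peak rather than sustained numbers, and the function names are hypothetical.

```python
PEAK_FLOPS = 4614e12   # 4,614 TFLOPs peak, as quoted above
HBM_BYTES = 192e9      # 192 GB of memory
HBM_BANDWIDTH = 7.2e12 # assumption: 7.2 TB/s (bytes, not bits)


def ridge_point(peak_flops, bandwidth_bytes_per_s):
    """FLOPs a kernel must perform per byte read to keep the chip compute-bound."""
    return peak_flops / bandwidth_bytes_per_s


def full_memory_sweep_seconds(capacity_bytes, bandwidth_bytes_per_s):
    """Time to read the entire memory once at peak bandwidth."""
    return capacity_bytes / bandwidth_bytes_per_s
```

Under these assumptions the ridge point is about 641 FLOPs per byte, and reading all 192 GB once takes about 27 ms. Workloads that do fewer operations per byte than the ridge point, as token-by-token LLM decoding typically does, are limited by memory bandwidth rather than by peak compute.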

Of course, NVIDIA’s dominance stems not only from its hardware performance but also from its comprehensive CUDA ecosystem. Google is well aware of this, which is why it has developed JAX, a Python library for high-performance numerical computing that runs on TPUs.

To further support developers, Google also released “Pathways,” a model pipelining solution that lets external developers train large language models (LLMs). Pathways allows researchers to develop LLMs like Gemini without redesigning models from scratch. Armed with this full “arsenal,” Google is finally ready to compete head-to-head with NVIDIA.

Conclusion

The market’s positive reception of Google’s TPUs reflects a growing trend: more companies are seeking to break free from NVIDIA’s “chip shortage” dilemma, pursuing higher cost-performance ratios and more diverse, stable supply chains.

Google is not the only company challenging NVIDIA, though. Supply chain data shows that Meta will launch its first ASIC chip, the MTIA T-V1, in the fourth quarter of 2025. Designed by Broadcom, the MTIA T-V1 features a complex motherboard architecture and uses a hybrid cooling system (liquid + air).

By mid-2026, the MTIA T-V1.5 will undergo further upgrades: its chip area will double, exceeding the specifications of NVIDIA’s next-generation GPU (codenamed “Rubin”), and its computing density will be directly comparable to NVIDIA’s GB200 system. The 2027 MTIA T-V2 is expected to feature larger-scale CoWoS packaging and a high-power rack design. According to supply chain estimates, Meta aims to ship 1 million to 1.5 million ASIC units between late 2025 and 2026.

Microsoft and Amazon have also developed their own in-house ASICs, and they too are eyeing the market long dominated by GPUs.

NVIDIA, for its part, has launched countermeasures. In May this year, NVIDIA officially released NVLink Fusion, which allows data centers to mix NVIDIA GPUs with third-party CPUs or custom AI accelerators—a move marking NVIDIA’s first step in breaking down hardware ecosystem barriers.

Recently, Colette Kress, NVIDIA’s Executive Vice President and Chief Financial Officer, addressed the competition from ASIC chips at a conference organized by Goldman Sachs, stating that NVIDIA GPUs offer better cost-performance.

The stage is set for a major showdown. Whether for the trillion-dollar market or for the right to define the hardware architecture of the latest AI wave, these tech giants have every reason to fight fiercely, for none of them can afford to lose.