With ChatGPT and GPT-4o, the way people obtain information is likely to change. GPT-4o may have opened a super gateway for OpenAI, one that could affect Google. What OpenAI needs to determine next is whether an ultimate user experience is a must-have in its products.

“GPT-4o is a huge leap forward in interactive mode,” Xu Peng, Vice President of Ant Group and head of NextEvo, told The Paper Technology on May 14. In the early hours of May 14, 2024, OpenAI showcased its latest multimodal large model, GPT-4o; the “o” stands for “omni,” meaning all-encompassing.

Compared with existing models, GPT-4o shows strong visual and audio comprehension. Its arrival has fueled speculation that we are gradually approaching the era depicted in the American science fiction film “Her.” Released in 2013, the movie tells the story of a man who falls in love with a voice assistant.

Competing With Google In Native Multimodal?

According to Mira Murati, Chief Technology Officer of OpenAI, GPT-4o can reason in real time across audio, vision, and text. It accepts any combination of text, audio, and images as input and generates any combination of text, audio, and images as output. It can respond to audio input in as little as 232 milliseconds, with an average of 320 milliseconds, which is similar to human response times in conversation.

In an interview with Latest, Xu Peng said that although OpenAI has not released the eagerly anticipated GPT-5, GPT-4o represents a significant advance in interactive mode. The major difference between GPT-4o and GPT-4 is that all modalities are integrated into a single model, with finer-grained multimodal fusion and a latency of only about 300 milliseconds. The model can also perceive emotion, tone, and expressions, which makes interaction more natural. Achieving this requires strong data organization, focused breakthroughs on key problems, and engineering optimization, and it expands what people can imagine for interaction.

Xu Peng believes that OpenAI has been preparing to compete in native multimodal models ever since Google launched its native multimodal Gemini model in December 2023. Ant Group committed firmly to the native multimodal direction earlier this year and is currently developing products such as full-modal digital humans and full-modal intelligent agents.

Native multimodal means training a model on multiple modalities (such as audio, video, and images) from the very beginning, rather than stitching separately trained models together into a multimodal system.

OpenAI’s goal is to achieve deep integration of multiple modalities. As early as the GPT-3 era, it released the Whisper automatic speech recognition system as preliminary research. “Putting various modal data such as speech, images, videos, and text under a unified representation framework is a very natural way to realize their API (Application Programming Interface) in their eyes, because humans are also multimodal intelligent agents for understanding and interacting.”

Fu Sheng, Chairman and CEO of Cheetah Mobile, said that although GPT-4o may disappoint AI practitioners, it combines a series of engines for images, text, and sound, so users do not need to switch back and forth. Most importantly, because the newly released voice assistant uses end-to-end large model technology, it can perceive emotional changes in real time and interject at the right moments, which he sees as the future of large models.

GPT-5 May Still Face Difficulties For A While?

Xu Peng explained that native multimodal models have three characteristics: end-to-end training that unifies all modalities, the ability to read, listen, and speak, and the ability to perform complex reasoning. “Images, text, speech, and video are encoded into one model, where they share a unified representation. When these data are fed into the model and trained together, the model learns all of the modalities. As long as their information is related, the internal representations are actually very close, so the model is more flexible when generating.” Xu Peng said that because the internal representations are already integrated, GPT-4o can output generated speech very quickly, achieving smooth, low-latency interaction. “OpenAI’s engineering capabilities are indeed admirable. With so many modalities and a large number of input tokens, it can still respond with a delay of two to three hundred milliseconds, which is rare progress in engineering.”
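The idea of a unified representation can be illustrated with a toy sketch. The example below is not OpenAI's architecture, which has not been disclosed. It assumes a hypothetical setup in which each modality has its own encoder (here a random linear map standing in for learned weights) that projects raw features into one shared embedding width, so a single model could consume them as one sequence. All names, dimensions, and feature values are invented for illustration.

```python
import math
import random

EMBED_DIM = 8  # hypothetical width of the shared representation


def make_projection(in_dim, out_dim, seed):
    """Random linear map standing in for a learned modality encoder."""
    rng = random.Random(seed)
    return [[rng.gauss(0, 1) for _ in range(in_dim)] for _ in range(out_dim)]


def project(matrix, features):
    """Map raw modality features into the shared embedding space."""
    return [sum(w * x for w, x in zip(row, features)) for row in matrix]


def cosine(a, b):
    """Cosine similarity, one common way to measure how close two vectors are."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# One encoder per modality; raw feature sizes differ, output width does not.
ENCODERS = {
    "text": make_projection(4, EMBED_DIM, seed=0),
    "audio": make_projection(6, EMBED_DIM, seed=1),
    "image": make_projection(5, EMBED_DIM, seed=2),
}


def build_sequence(segments):
    """Encode (modality, features) pairs into one sequence of same-width vectors."""
    return [(modality, project(ENCODERS[modality], feats))
            for modality, feats in segments]


sequence = build_sequence([
    ("text", [0.1, 0.2, 0.3, 0.4]),
    ("audio", [0.5, 0.1, 0.0, 0.2, 0.3, 0.9]),
    ("image", [0.7, 0.7, 0.1, 0.4, 0.2]),
])

for modality, vector in sequence:
    print(modality, len(vector))
```

Because the weights here are random, the similarity between modalities carries no meaning. In a trained native multimodal model, `cosine` applied to related content (for example, a spoken word and its written form) would be expected to return a value close to 1, which is the closeness Xu Peng describes.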

OpenAI executives have not disclosed what kind of data was used to train GPT-4o, nor have they revealed whether OpenAI can train the model with less computing power.

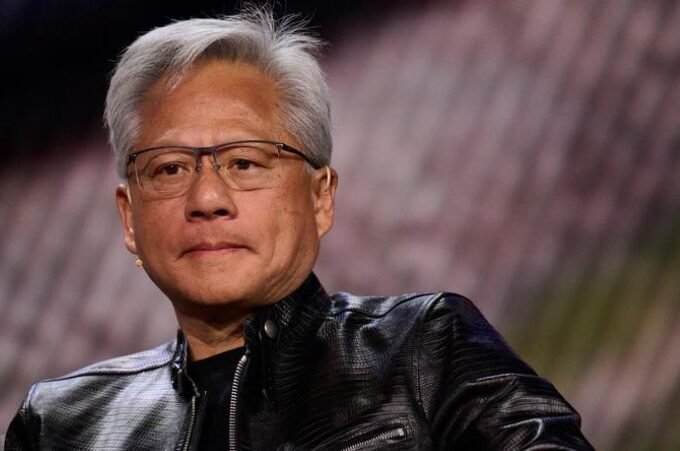

Xiong Weiming, a technology investor and founding partner of Huachuang Capital, told Latest that although OpenAI disclosed few technical details about training GPT-4o at this launch event, it is reasonable to speculate that implementing such end-to-end large model technology relies on powerful computing support. “It’s definitely a case of ‘great efforts lead to great achievements.’ In this regard, the American computing power market is indeed much more mature, and the capital market also supports large-scale investment in computing power,” Xiong Weiming said.

Fu Sheng believes that if parameters are stacked without regard to cost to enhance the so-called large-scale model capabilities, this path will certainly encounter difficulties. He predicts that GPT-5 may still face difficulties for a while.

Has The Super Gateway Been Opened?

According to OpenAI’s official website, GPT-4o’s text and image capabilities are now available for free in ChatGPT, and Plus users get a quota five times higher. The new version of voice mode will be rolled out to Plus users in the coming weeks, and support for GPT-4o’s new audio and video capabilities will also be rolled out gradually in the API.

In Xiong Weiming’s view, OpenAI’s product strategy can attract free users and collect a large amount of user data to feed model training, which helps further improve the product. “The user data of such interactive large models will be very rich.” At the same time, the strategy can cultivate users’ willingness to pay, which makes it a commercial experiment as well.

“I think OpenAI’s attempt may change the usage habits of some users in China. Everyone may be willing to pay for the use of AI platforms,” Xiong Weiming said.

Xu Peng believes that the services OpenAI is opening to users for free center on GPT-4o’s native multimodal capabilities. In the future, more companies will be able to build more natural interaction products for vertical domains on top of GPT-4o.

Over the past week, foreign media have repeatedly reported that OpenAI will launch an AI search product. Although OpenAI has not launched a search engine, Xu Peng believes that with ChatGPT and GPT-4o, the way people obtain information is likely to change. GPT-4o may have opened a super gateway for OpenAI, one that could affect Google. What OpenAI needs to determine next is whether an ultimate user experience is a must-have in its products.

Chen Lei, Vice President of Xinyi Technology and Head of Big Data and AI, believes that from a technological perspective the release of GPT-4o is epoch-making because it truly realizes multimodal interaction. What deserves more attention, he said, is how the technology will be put into practice as it is commercialized. “Speech recognition and speech generation are not the hardest part; the hardest part is inference and induction. GPT-4o makes answering questions more difficult than before. Adjusting the algorithm to a certain extent can achieve smooth interaction, but thinking, reasoning, induction, and summarization like a human are manifestations of higher intelligence.”

Chen Lei also said that while China was benchmarking against GPT-4, OpenAI launched GPT-4o. The industry needs to consider how to differentiate itself while it keeps chasing. “We have always been chasing, and when we reach a certain level, we find that a new generation of products has been launched, and we always feel behind, so we need to adjust our mentality and find another way,” Chen Lei said.

Fu Sheng believes that OpenAI’s release of GPT-4o applications shows that large models have great potential at the application level and that their capabilities will continue to iterate. Ultimately, however, what makes large models useful is their application.