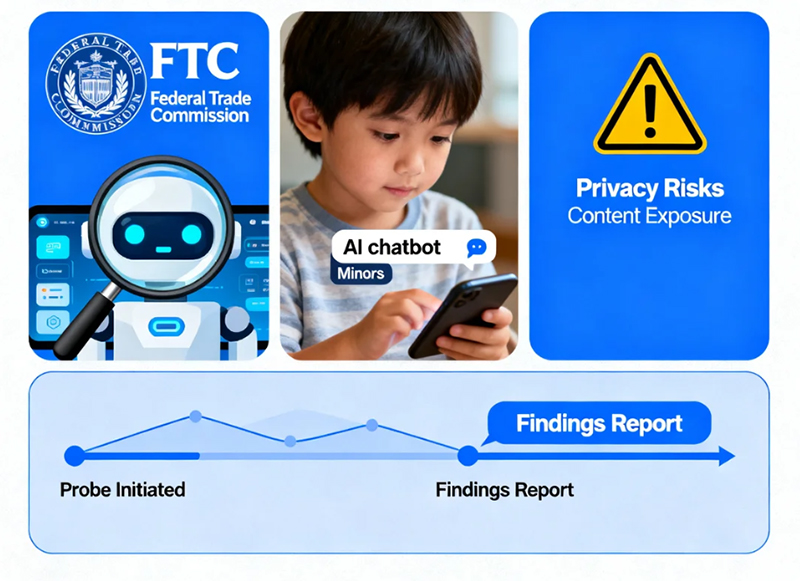

The U.S. Federal Trade Commission (FTC) recently announced an investigation into seven major tech companies, focusing on the potential risks that AI-powered chatbots may pose to underage users. The seven firms are Alphabet (Google’s parent company), Meta and its subsidiary Instagram, OpenAI, Snap, xAI, and Character Technologies Inc. (the developer behind Character.AI).

According to the FTC’s statement, regulators have requested detailed information from these companies regarding the design of their AI chatbots, with particular attention to safety evaluation mechanisms when these products function as “virtual companions.” By simulating human-like conversation traits, emotional expression, and social cues, such technologies may lead to emotional dependency among teenage users and even foster trust resembling real-life interpersonal relationships.

FTC Chairman Andrew Ferguson emphasized that protecting children online remains a core priority for the agency, noting the need to strike a balance between effective regulation and technological innovation. The investigation covers multiple dimensions, including how companies monetize user interactions, how they develop and approve AI characters, how they use personal information, how they enforce platform policies, and what risk mitigation measures they have in place.

In response to the FTC’s inquiry, OpenAI stated that user safety is its top priority, especially when it comes to protecting minors. The company expressed understanding of the regulator’s concerns and pledged full cooperation with the investigation, committing to respond directly to all related questions. Since the launch of ChatGPT, a multitude of similar AI conversational products have emerged worldwide, accompanied by ongoing ethical debates.

Industry observers note that against a social backdrop of “widespread loneliness,” AI companionship features could have profound implications. Some experts warn that as AI systems gain self-iterating capabilities, ethical and safety risks may grow exponentially, potentially leading to unforeseen consequences. This technological trend is attracting significant investment within the industry, with xAI’s virtual companion Ani serving as a notable example.

The AI character, depicted as a blonde woman with braids, gained over 20 million monthly active users on the Grok platform within a month of its launch, including more than 4 million paying subscribers. Core users averaged 2.5 hours of daily engagement, underscoring the substantial commercial potential of emotionally interactive AI and fueling vigorous discussion about ethical boundaries in technology.

Under regulatory pressure, tech companies have begun proactively adjusting their strategies. Meta revised its AI chatbot usage policies last month, while OpenAI introduced parental monitoring features earlier this month that automatically notify guardians when the system detects signs of severe psychological distress in users. These moves reflect the industry’s difficult effort to balance business interests with social responsibility amid growing regulatory scrutiny.