Who wouldn’t want a robot that can handle all the household chores? It’s a long-standing dream of robotics.

Roboticists have impressively shown robots performing feats like parkour in controlled lab settings. Trusting a robot to work autonomously in your home, however, still raises concerns, especially in households with children and pets. Homes are also hard for robots because each one is different, with its own room layout and its own way of arranging things.

Within robotics, there’s a well-known observation called “Moravec’s paradox”: what is hard for humans is easy for machines, and what is easy for humans is hard for machines. Thanks to artificial intelligence (AI), this is starting to change. Robots can now fold clothes, cook, and unload shopping baskets, tasks that were once considered nearly impossible for them.

According to a recent MIT Technology Review report, robotics is at an inflection point, and robots may soon move out of the lab and into our homes. The era of household robots may finally be arriving.

Affordability Is Key for Household Robots

In the past, robots were synonymous with expense: highly complex models could cost hundreds of thousands of dollars, putting them out of reach for most families. The PR2, for example, one of the earliest attempts at a household robot, weighed 200 kilograms and cost $400,000.

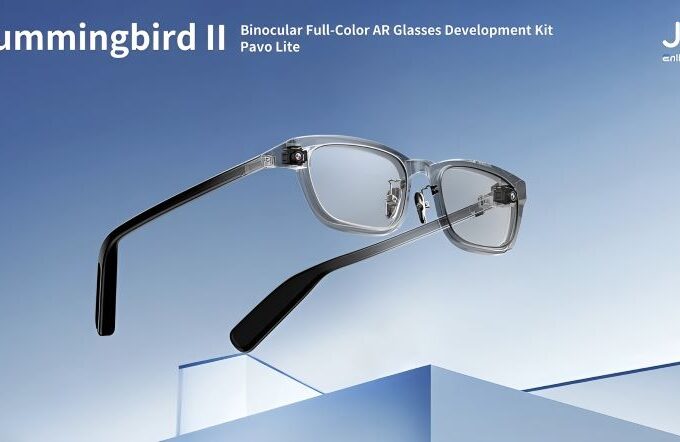

Fortunately, a new generation of more affordable robots is emerging. One example is Stretch 3, a household robot from the American startup Hello Robot, which costs a far more reasonable $24,950 and weighs 24.5 kilograms. It has a small wheeled base, a mast with a camera, an adjustable arm, and a gripper with suction cups, and it can be operated with a console controller.

Meanwhile, a research team at Stanford University has developed a system called Mobile ALOHA (ALOHA stands for “A Low-cost Open-source HArdware System for Bimanual Teleoperation”), which lets a robot learn to cook shrimp from just 20 human demonstrations plus data from other tasks. The team assembled its robots from off-the-shelf components, bringing the price down to the tens of thousands of dollars rather than the hundreds of thousands that earlier models cost.

AI Is Building a “Universal Robot Brain”

What really sets these new robots apart from their predecessors is their software. Thanks to the AI boom, the focus has shifted from achieving physical dexterity with expensive hardware to building a “universal robot brain” out of neural networks.

According to the report, rather than relying on painstaking planning and training, roboticists are now using deep learning and neural networks to build “brains” that let robots learn from their environment on the fly and adjust their behavior accordingly.

In the summer of 2023, Google introduced RT-2, a vision-language-action model that draws its general understanding of the world from the online text and images it was trained on, as well as from its own interactions, and translates that knowledge into robot actions.

Researchers at Toyota Research Institute, Columbia University, and MIT have quickly taught robots many new tasks by combining a technique called imitation learning with generative AI. This approach carries generative AI beyond text, images, and video into the domain of robot motion.

Covariant, a startup spun off from OpenAI’s now-defunct robotics research unit, has built a multimodal model called RFM-1 that accepts prompts in the form of text, images, video, and robot instructions. Generative AI lets the robot both understand instructions and generate images or videos related to those tasks.

More Data Breeds Smarter Robots

The power of large AI models such as GPT-4 comes from the vast amounts of data available on the internet, but that approach doesn’t carry over to robots, which need data collected specifically for them. They need physical demonstrations of how to open washing machines and refrigerators, pick up plates, or fold laundry. Such data is currently scarce and slow to collect.

Google DeepMind launched an initiative called the “Open X-Embodiment Collaboration” to change this. In 2023, it partnered with 34 research labs and about 150 researchers to collect data from 22 different robots, including Hello Robot’s Stretch. The resulting dataset, released in October 2023, covers 527 robot skills, such as picking, pushing, and moving.

Using this data, researchers built two versions of a model called RT-X: one that can run locally on individual labs’ computers and one that can be accessed over the web.

The larger, web-accessible model was pretrained on internet data, drawing on large language and image models to develop “visual common sense.” When researchers ran RT-X on many different robots, the robots learned skills 50% more successfully than with the systems each lab had developed on its own.

In short, more data makes for smarter robots.